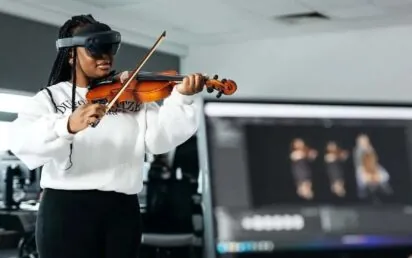

Researchers from the University of Birmingham have developed a platform that allows musicians to practise, collaborate and perform in the company of virtual avatars.

The Joint Active Music Sessions (JAMS) platform uses avatars created by individual musicians and shared with fellow musicians to create virtual concerts and practice sessions, or to enhance music teaching.

JAMS has been developed with and for musicians, whether they are established performers or at an early stage of learning.

“A musician records themselves and sends the video to another musician. The software creates a responsive avatar that plays in perfect synchrony with the music partner,” said Dr Massimiliano Di Luca from the University of Birmingham.

“All you need is an iPhone and a VR headset to bring musicians together for performance, practice, or teaching.”

Birmingham researcher Diar Abdlkarim will be at the NAMM show in the US next week to introduce the JAMS platform to the music industry.

The researchers say that the avatars capture the unspoken moments that are key in musical performance, allowing practice partners or performers to watch the tip of a violinist’s bow, or make eye contact at critical points in the piece.

The avatars adapt in real time and respond to the musician wearing the VR headset, allowing the platform to deliver a unique, personalised experience.

There is also no ‘latency’ in the JAMS user experience. Dr Di Luca added: “Performers can start to feel the effects of latency as low as 10 milliseconds, throwing them ‘off-beat’, breaking their concentration, or distracting them from the technical aspects of playing.”

JAMS allows musicians to perform in an interactive virtual group, and can be adapted for lip-syncing or dubbing in media.

It can also gather unique user data to create digital twins of musicians.